Unregulated AI has made the 2026 midterms a chaotic digital battlefield, with federal agencies scrambling to combat a tidal wave of deceptive deepfakes.

What to Know

- AI-generated deepfakes, including fabricated audio and video of candidates, are emerging as a widespread tactic in the 2026 election cycle.

- The Federal Election Commission remains divided along partisan lines and has failed to establish clear guidelines governing AI use in campaign advertising.

- The Federal Communications Commission has ruled that robocalls using AI-generated voices require prior express consent, but the rule does not extend to digital ads, television, or social media content.

- Experts caution that the objective of deepfakes is not only deception, but the gradual erosion of public trust in all political information.

- Without a coordinated federal response, a regulatory vacuum persists, allowing campaigns and outside groups to deploy AI-driven disinformation with few immediate legal repercussions.

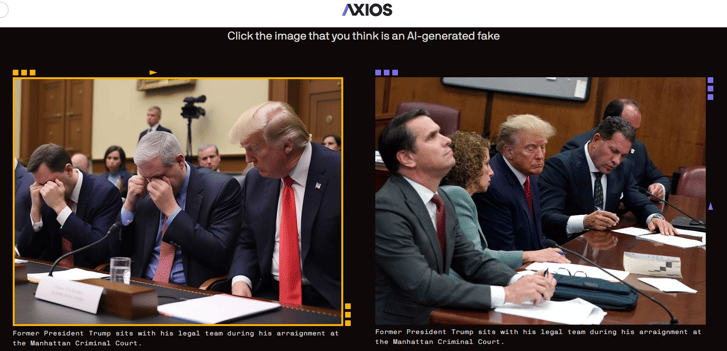

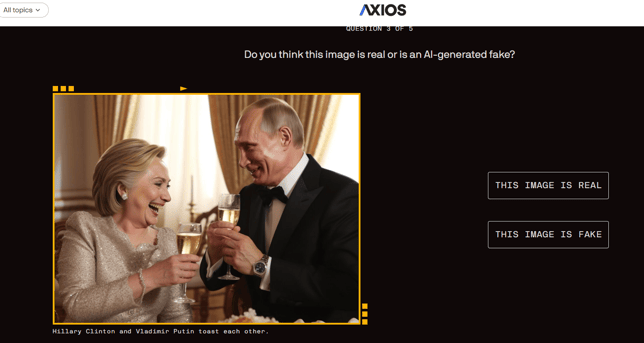

What began as a theoretical warning has become an operational reality in American politics. As reported by AXIOS in its feature “Can You Trust Your Eyes? The Deepfake Election,” AI-generated images and synthetic media have evolved from novelty tools into photorealistic instruments capable of distorting political reality. Now, heading into the 2026 midterms, those capabilities are no longer experimental. They are being deployed in live campaign environments.

Example of a deepfake; made by Gemini

The problem is accelerating faster than regulation. According to Max Rieper’s 2026 Technology & Privacy analysis on evolving state AI laws, lawmakers have made progress targeting sexual deepfakes and requiring disclaimers on manipulated political advertising, but constitutional challenges and uneven state standards have limited enforcement. Meanwhile, the Federal Election Commission (FEC) remains stalled on comprehensive AI advertising rules, and federal action has been largely piecemeal.

A Deadlock at the Top

The agency most directly responsible for overseeing the fairness of political campaigns has been largely paralyzed. The FEC, the body tasked with interpreting and enforcing campaign finance law, has not issued AI-specific rules for political advertising. As reported by AXIOS, the commission has been stuck in a partisan deadlock, unable to reach a consensus on how to handle the deceptive potential of deepfakes.

Screenshot from the AXIOS website of an example question asking readers to discern AI-generated content

The result is a widening regulatory gap. Fabricated audio of candidates, convincingly altered videos, and digitally manipulated political ads are circulating in an information ecosystem where the burden increasingly falls on voters to verify what they see. As AXIOS highlights, the greater danger is not simply deception, but erosion of trust itself. When synthetic media becomes indistinguishable from authentic footage, the democratic process faces a more destabilizing threat: an electorate unsure of what, or whom, to believe.

This regulatory drift has not occurred in silence. For more than a year, prominent watchdog organizations have pressed the FEC to clarify how existing election law applies to AI-generated political content. Public Citizen filed a formal petition asking the FEC to affirm that long-standing prohibitions on “fraudulent misrepresentation” extend to deepfake campaign ads.

Protect Democracy, working alongside the Campaign Legal Center, urged the commission to use its current authority to regulate deceptive AI-driven communications. The Brennan Center for Justice similarly called on the FEC to initiate a rulemaking process specifically targeting deliberately deceptive AI-produced campaign content.

Lawmakers have reinforced that pressure. A coalition of 50 Democratic members of Congress, led by Senator Adam Schiff, submitted a letter supporting Public Citizen’s petition and warning that voters increasingly struggle to distinguish authentic material from fabricated media.

Senator Adam Schiff, image via X (Twitter)

The FEC has acknowledged these concerns and sought public comment. It has issued interpretive guidance clarifying that existing rules against fraudulent misrepresentation apply regardless of the technology used. However, the commission has stopped short of creating specific AI-focused regulations, choosing instead to evaluate complaints on a case-by-case basis.

Because the agency is split evenly between three Democratic and three Republican commissioners, consensus on broader rulemaking has proven difficult, and the debate often divides along familiar lines between free speech protections and fraud prevention. Meanwhile, the Federal Communications Commission (FCC) has proposed narrower disclosure requirements for AI-generated political content in broadcast television and radio ads, but those rules do not extend comprehensively across digital platforms.

Political Deepfake Legislation Across Jurisdictions

In the absence of comprehensive federal standards governing AI in elections, states have advanced a range of political deepfake laws aimed at deterring deception and protecting voters. These measures reflect differing regulatory philosophies, including criminal penalties, disclosure mandates, and defined liability structures for AI-generated campaign content.

Maryland SB0141

This law criminalizes the use of AI-generated deepfakes to spread election misinformation. It authorizes the removal of deceptive content and imposes fines and potential prison sentences for individuals who knowingly distribute manipulated media intended to mislead voters.

Missouri SB 509

This proposal would require political advertisements that use artificial intelligence to include clear disclaimers. It also defines AI systems as nonsentient entities, ensuring they cannot hold legal personhood and placing legal responsibility on the individual directing the AI for any resulting harm.

Texas SB753

Texas prohibits the publication of a deepfake video intended to influence voters within 30 days of an election. This represents a prohibition-based regulatory model that restricts certain deceptive synthetic media during a defined pre-election window.

California AB 2355, AB 2655, and AB 2839

California enacted a series of laws, including AB 2355, AB 2655, and AB 2839, requiring disclaimers on political advertisements containing AI-generated or materially altered content and seeking to hold platforms accountable. Portions of these laws have faced constitutional challenges and have been partially struck down in court.

NO FAKES Act H.R.2794

Reintroduced in 2025, this bipartisan federal bill would establish a right of publicity protecting individuals from the unauthorized use of their likeness or voice in deepfakes and digital replicas. Although not limited to elections, it would provide a legal framework that could be applied to synthetic media involving political figures.

Regulatory Fragmentation

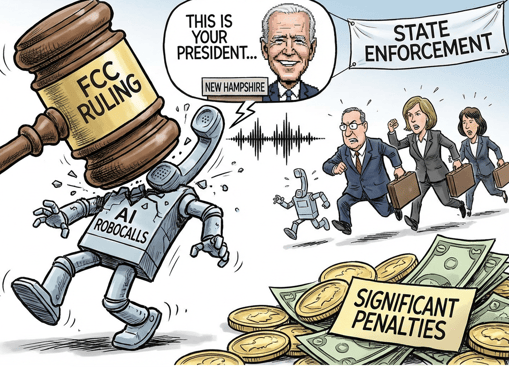

While the FEC remains gridlocked on AI campaign rules, the FCC has acted within its lane. In February 2024, the FCC ruled that AI-generated and voice-cloned audio qualifies as an “artificial or prerecorded voice” under the Telephone Consumer Protection Act, meaning robocalls using synthetic voices require prior express consent and full TCPA compliance.

A Closer Look at the TCPA: The Telephone Consumer Protection Act (TCPA), codified at 47 U.S.C. § 227, is a federal statute that restricts the use of automated telephone dialing systems, artificial or prerecorded voice messages, unsolicited fax advertisements, and certain telemarketing practices without prior express consent. The law establishes consumer privacy protections, creates a private right of action with statutory damages, and authorizes both federal and state enforcement against violators.

The decision followed a rise in AI-powered robocall complaints, including a deepfake of then-President Biden’s voice targeting New Hampshire voters. The ruling allows state attorneys general to pursue enforcement and exposes violators to significant penalties.

However, the FCC’s authority is limited to telecommunications. The rule does not cover AI-generated videos or manipulated content on social media, streaming platforms, or digital ads. As a result, while robocalls now fall under clearer regulation, much of the broader deepfake ecosystem remains largely unregulated at the federal level.

The Erosion of Trust

The true danger of AI deepfakes extends beyond simply fooling a voter in a single instance. The ultimate goal of many disinformation campaigns is more insidious: to create an environment of such pervasive distrust that citizens begin to question the validity of all information, including legitimate news and authentic recordings. When anything can be faked, it becomes easier for bad actors to dismiss genuine evidence of wrongdoing as just another "deepfake," a phenomenon known as the "liar's dividend."

Screenshot of another example of an AI-generated image by AXIOS

This creates a crippling challenge for campaigns and election officials. A candidate targeted by a deepfake must spend precious time and resources not on debating policy, but on debunking a lie. By the time a fabrication is proven false, it has already spread widely, and the damage to the candidate's reputation may be irreversible.

More broadly, it poisons the well of public discourse. If voters cannot agree on a basic set of facts, the very foundation of a functioning democracy begins to crumble. The 2026 midterms are serving as a real-time experiment in just how much damage this technology can inflict on public trust.

Wrap Up

The fractured and sluggish response from federal regulators has effectively turned the 2026 election cycle into the "Wild West" of AI-driven disinformation. In the absence of clear rules and harsh penalties, there is a powerful incentive for unscrupulous campaigns and foreign adversaries to push the boundaries of ethical conduct.

The current environment rewards those willing to deceive, while putting honest campaigns at a distinct disadvantage. This is not a sustainable or healthy situation for a democracy, and it sets a deeply worrying precedent for the even higher-stakes presidential election in 2028.

The challenge of AI deepfakes is not merely a technological one; it is a profound test of our democratic institutions and our collective commitment to a fact-based reality. Without a swift and comprehensive strategy that includes clear regulations, robust enforcement, and a nationwide effort to improve media literacy, we risk entering an era where truth is subjective and elections are won not by the best ideas, but by the most convincing lies. The integrity of every future election depends on confronting this threat head-on, before the trust that underpins our system is eroded beyond repair.