As states rush to regulate AI in political advertising, campaigns using generative tools face a growing patchwork of laws with steep penalties, making internal compliance a critical operational challenge.

What to Know:

- A growing number of states, now at least 26, have enacted laws regulating the use of artificial intelligence in political advertising.

- These laws, along with pending bills like Maine's proposed LD 517, typically require clear disclaimers on ads that are "materially deceptive," such as deepfake videos or audio.

- Penalties can be severe, with potential fines of up to five times the cost of the ad buy, creating significant financial risk for non-compliant campaigns.

- Liability for violations falls on the ad’s creator (the campaign or its vendors), not on the TV stations or platforms that run the ad.

- Most laws are narrowly focused on paid advertising, creating a potential gray area for unpaid, viral content shared on social media.

Generative artificial intelligence is offering political campaigns a powerful new arsenal of creative tools, promising unprecedented speed in producing everything from ad scripts to video content. But as campaigns race to adopt this technology, state legislatures are racing to regulate it, creating a complex and hazardous new compliance landscape for political advertisers. With at least 26 states now having laws on the books to govern AI in elections, the risk of running afoul of disclosure rules is no longer a hypothetical problem for the future; it is a clear and present danger.

A proposed law in Maine, detailed in a recent report by NEWS CENTER Maine, serves as a perfect case study for the kinds of regulations campaigns will face nationwide. The bill, LD 517, highlights the central challenge for the 2026 cycle: how to leverage the power of AI for creative production without incurring crippling financial penalties or triggering a public relations disaster. For campaign managers and media consultants, understanding this evolving patchwork of state laws is now a core operational necessity.

The Expanding Patchwork of State Laws

The central goal of the legislative push across the country is to ensure transparency and prevent voters from being fooled by AI-generated deepfakes. These laws are designed to address content that is "materially deceptive," which generally means using AI to depict a real person saying or doing something they did not, in a way that could mislead a reasonable viewer.

Maine’s LD 517 provides a clear blueprint for what this looks like in practice. The bill would require any political ad that qualifies as a “materially deceptive or fraudulent political communication” to carry a specific disclaimer: “This communication contains audio, video, and/or images that have been manipulated or altered.” The definition is intended to capture everything from a fake audio recording of a candidate to a video that has been altered to place an opponent in a compromising or false situation.

For campaigns, this means that even a minor AI alteration would require a disclaimer if the result could be perceived as deceptive. The threshold is not about the sophistication of the technology used, but about the potential for the end product to mislead the public. As AI tools become more integrated into standard video and image editing software, the risk of inadvertently creating content that meets this definition will only grow.

Where the Risk Lies: Creators, Not Platforms

One of the most critical details for campaigns to understand is where legal liability rests. The Maine bill, in a provision echoed in other states, makes it clear that the responsibility for compliance falls squarely on the creator of the content, not the conduit that distributes it.

As the Maine Association of Broadcasters argued in support of the bill, any penalties for violations should be “confined to the creators of the content and not the conduit for how [it's] broadcasted.”

This means a campaign or its consulting firm cannot shift the blame to the local television station that aired the ad or the digital platform that hosted it. The entity that produced and paid for the ad is the one on the hook, and the financial stakes are substantial: LD 517 proposes a fine that could equal the total cost of the advertisement or run as high as five times that amount.

For a campaign running a significant statewide ad buy, this could easily translate into a six- or seven-figure penalty, an amount that could be financially fatal in the final stretch of a close race. This penalty structure is designed to be a powerful deterrent, forcing campaigns to take compliance seriously from the outset.
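To make that exposure concrete, here is a minimal sketch of the arithmetic. It assumes the fine falls somewhere between one and five times the cost of the ad, which is one reading of the range described above. The function name `fine_range` and the $250,000 ad buy are hypothetical and do not come from the bill or the report.

```python
def fine_range(ad_cost: float,
               min_multiple: float = 1.0,
               max_multiple: float = 5.0) -> tuple[float, float]:
    """Return the (low, high) potential fine for a single ad buy.

    The 1x and 5x multiples are assumptions based on the article's
    description of LD 517, not on the bill text itself.
    """
    if ad_cost < 0:
        raise ValueError("ad_cost must be non-negative")
    return ad_cost * min_multiple, ad_cost * max_multiple


# Hypothetical statewide buy of $250,000
low, high = fine_range(250_000)
print(f"Potential fine: ${low:,.0f} to ${high:,.0f}")
# prints "Potential fine: $250,000 to $1,250,000"
```

Under these assumptions, a quarter-million-dollar buy already reaches seven figures at the top of the range.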

The Paid vs. Unpaid Media Divide

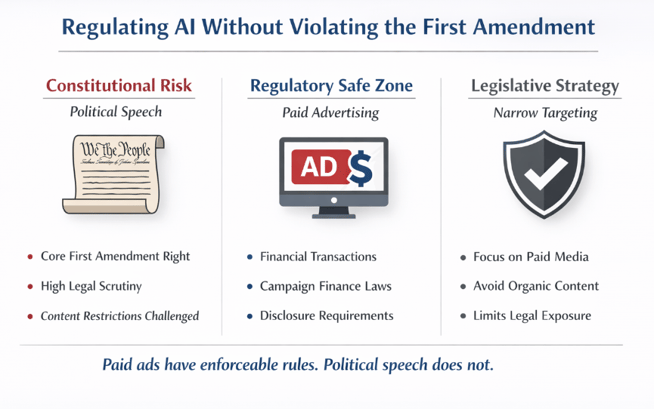

In a deliberate effort to avoid First Amendment challenges, most of these new AI regulations are narrowly tailored to focus on paid media. The Maine bill, for instance, is written to apply only to ads from groups and candidates that report their finances to the state’s ethics commission.

This means that unpaid, organic content, like a video created by a supporter and shared on social media, would likely not require a disclaimer under this specific law.

“Many proposals focus on disclosure requirements for paid or commercial uses of artificial intelligence, reflecting lawmakers’ intent to regulate areas where established campaign finance and advertising rules already exist, while avoiding direct regulation of political speech.” — AI Legislation Tracker, American Action Forum

Lawmakers are proceeding cautiously, recognizing that regulating political speech is constitutionally fraught territory. The focus on paid advertising provides a clearer legal basis for regulation, as it targets commercial transactions that are already subject to a host of campaign finance rules.

However, campaigns should not interpret this as a green light for deceptive practices in the unpaid media space. While a viral deepfake on X (formerly Twitter) might not trigger a penalty under a specific AI disclaimer law, it could still open the campaign up to defamation lawsuits and, more importantly, cause immense and irreversible political damage. The court of public opinion can be far quicker and more brutal than the legal system, and an AI-generated scandal can sink a candidacy regardless of whether a specific statute was violated.

Building a Compliance-First Workflow

As campaigns increasingly rely on AI for everything from generating background images to creating voiceovers, the risk of accidental non-compliance is rising sharply. A junior designer using a new generative feature in Adobe Photoshop could inadvertently create an image that triggers a disclosure requirement under state law. As recent legal analysis makes clear, this risk is no longer theoretical.

“States are adopting their own laws on AI in political ads, creating new obligations for campaigns, committees, vendors, and platforms that operate across jurisdictions. Most focus on requiring disclaimers or disclosures when synthetic content is used.” — BakerHostetler

To mitigate this exposure, campaigns must move beyond ad hoc processes and build AI governance directly into their creative production workflow. Campaigns need to establish clear internal review standards. Any piece of creative that uses generative AI, no matter how minor the application, should be flagged for review by a senior staffer or legal counsel. The individual who created the content should not be the one making the final compliance determination. This separation creates a necessary check to catch potential issues before publication, especially in a legal environment that remains unsettled and state-specific.
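For teams that track creative assets in software, the review rule described above could be sketched as a simple publishing gate. This is an illustration under stated assumptions, not a legal standard. The `Creative` record, its field names, and the `can_publish` function are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Creative:
    """Hypothetical record for one piece of campaign creative."""
    title: str
    creator: str
    uses_generative_ai: bool
    reviewer: Optional[str] = None              # senior staffer or counsel
    disclaimer_required: Optional[bool] = None  # the reviewer's decision


def can_publish(item: Creative) -> tuple[bool, str]:
    """Return (allowed, reason) under the separation-of-duties rule."""
    if not item.uses_generative_ai:
        return True, "no generative AI used"
    if item.reviewer is None:
        return False, "flagged: needs senior or legal review"
    if item.reviewer == item.creator:
        return False, "blocked: creator cannot be the final reviewer"
    if item.disclaimer_required is None:
        return False, "pending: no disclaimer decision recorded"
    return True, "cleared by independent reviewer"


ad = Creative("Fall TV spot", creator="designer_a", uses_generative_ai=True)
print(can_publish(ad))  # not allowed until someone else reviews it
```

The check that matters most is the comparison between `reviewer` and `creator`: any AI-assisted asset stays blocked until a different person records a disclaimer decision.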

Wrap Up

Artificial intelligence offers political campaigns a suite of powerful tools to enhance creativity and efficiency, but the rapid proliferation of state-level regulations has turned the landscape into a compliance minefield. The days of treating AI as an experimental toy are over. The financial and reputational risks associated with non-compliance are now simply too high for any serious campaign to ignore.

Looking ahead to the 2026 midterms, this patchwork of laws will only grow more complex as more states act and existing laws are refined. The campaigns that will succeed are the ones that treat AI governance not as a legal afterthought, but as a core operational function. By building clear review processes, establishing conservative standards for disclosure, and educating their teams on the risks, campaigns can harness the power of AI without falling victim to its legal pitfalls.